SAGE: Smart Any-Horizon Agent for Long Video Reasoning


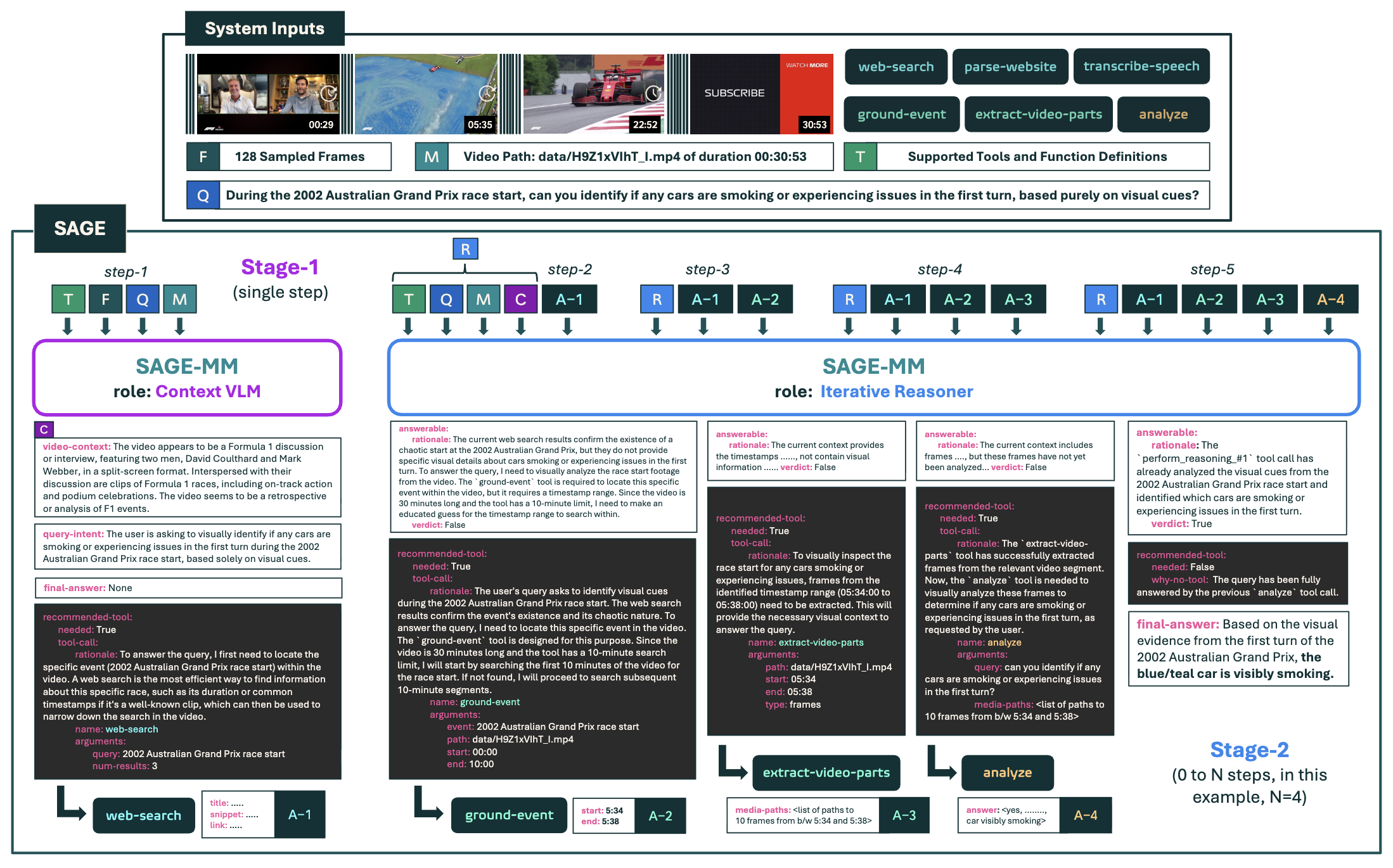

SAGE-MM operates as the core decision-maker within the SAGE system. It functions in two distinct stages:

The model is trained to generate JSON-formatted actions to invoke the following tools:

- `web-search`: Search the internet for external knowledge (e.g., sports standings, cast lists).
- `transcribe-speech`: Perform ASR on specific timestamped segments of the video.
- `ground-event`: Locate start/end timestamps for specific visual events.
- `extract-video-parts`: Extract high-resolution frames or subclips from specific timestamps.
- `analyze`: Perform detailed visual analysis on extracted media.

Note: SAGE-MM outputs JSON action strings. It requires a runtime environment (provided in our GitHub repo) to parse these strings, execute the tools, and feed the observation back to the model.
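To make the parse-execute-observe loop concrete, here is a minimal sketch of how a runtime might parse one action string and dispatch it to a tool. It is not the runtime from the GitHub repo: the JSON field names `tool` and `args`, the stub tool implementations, and the `run_action` helper are all hypothetical, chosen only for illustration. Only the five tool names come from the list above.

```python
import json

# Tool names taken from the model card; the implementations below are stubs.
TOOL_NAMES = (
    "web-search",
    "transcribe-speech",
    "ground-event",
    "extract-video-parts",
    "analyze",
)


def make_stub(name):
    """Build a placeholder tool that just reports how it was called."""
    def stub(**kwargs):
        return f"[{name}] called with {sorted(kwargs)}"
    return stub


TOOLS = {name: make_stub(name) for name in TOOL_NAMES}


def run_action(action_str, tools=TOOLS):
    """Parse one JSON action string and return an observation string.

    ASSUMPTION: the action looks like {"tool": <name>, "args": {...}}.
    The actual schema emitted by SAGE-MM may differ.
    """
    try:
        action = json.loads(action_str)
    except json.JSONDecodeError as exc:
        return f"error: invalid JSON ({exc.msg})"
    if not isinstance(action, dict):
        return "error: action must be a JSON object"

    name = action.get("tool")
    if not isinstance(name, str) or name not in tools:
        return f"error: unknown tool {name!r}"

    args = action.get("args", {})
    if not isinstance(args, dict):
        return "error: args must be a JSON object"

    # The returned string is the observation fed back to the model.
    return tools[name](**args)


if __name__ == "__main__":
    example = '{"tool": "transcribe-speech", "args": {"start": 10, "end": 25}}'
    print(run_action(example))
```

Returning errors as observation strings, rather than raising, lets a malformed action be fed back to the model so it can retry. Whether the actual runtime behaves this way is not stated here.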

This model is licensed under Apache 2.0. It is intended for research and educational use in accordance with Ai2's Responsible Use Guidelines.